Artificial intelligence (AI) and regional revitalization

As frequently mentioned on this website, Awaji Island is experiencing a declining birthrate and an aging population. As in many other regions of Japan, achieving regional development and happy lives for local residents is a major challenge. In response, the Japanese government has presented several policies. One is for residents to live in concentrated areas rather than spread out over wide distances. Another is to make use of the latest science and technology. In particular, IT makes communication easier, which in turn makes it easier for people to live in remote locations. The number of people using social networking services (SNS) such as Facebook continues to grow every day.

The rapid progress of artificial intelligence (AI) holds great promise as a solution to these social issues. For example, dealing with health problems is a particular challenge for elderly people living in areas where labor shortages are acute. Being able to get answers to health questions through a smartphone would clearly help, even in rural areas where medical facilities and personnel may be hard to find.

What exactly is the technology that allows AI to answer our questions? ChatGPT, developed by the American company OpenAI, may be the best-known example of current AI technology. This software understands human language, responds to all kinds of questions in multiple languages including Japanese, and has sophisticated translation capabilities. Such programs are called large language models (LLMs), and they themselves store a wide range of knowledge, from the meanings of words to grammar and usage. The most advanced versions can create pictures, compose music and even write scientific papers.

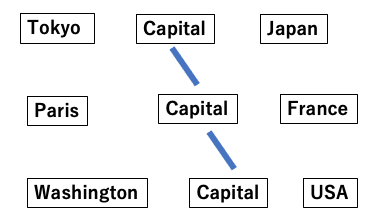

How did software this advanced become possible? A special feature in the October 2023 issue of Nikkei Science magazine, titled “Large-scale language models: AI that changes science,” answers this question. In particular, the article “What are large-scale language models?” by Masaaki Demura goes into detail. Readers are encouraged to read the article itself, but here are the main points. To be able to converse with humans, computers need to learn vocabulary, grammar, and other rules. At first, they were made to memorize whole words and example sentences, but when there were not enough example sentences, such programs could not give satisfactory answers. In the next stage, therefore, computers memorized the relationships between words as well as the meanings of the words themselves. For example, Tokyo, Washington, and Paris can all be categorized as world capitals, but they belong to different countries and differ in size and environment. If we ask a computer about the relationship between Tokyo and Paris, it will answer that both are capitals, of Japan and France respectively. Such relationships become clear when each element of the words is compared (Fig. 1).
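The Tokyo and Paris example can be made concrete with word vectors, in the style popularised by word-embedding models such as word2vec, where a relationship like “capital of” appears as a difference between vectors. The sketch below is a minimal illustration under strong assumptions: the four elements and all of the numbers are hypothetical values chosen by hand, whereas a real model learns hundreds of elements from text. Taking “Tokyo”, subtracting “Japan” and adding “France” lands closest to “Paris”.

```python
import math

# Hypothetical, hand-picked vectors for illustration only (not from a real model).
# Element order: [capital city, related to Japan, related to France, related to USA]
VECTORS = {
    "Tokyo":      [0.9, 0.9, 0.0, 0.0],
    "Paris":      [0.9, 0.0, 0.9, 0.0],
    "Washington": [0.9, 0.0, 0.0, 0.9],
    "Japan":      [0.1, 0.9, 0.0, 0.0],
    "France":     [0.1, 0.0, 0.9, 0.0],
    "USA":        [0.1, 0.0, 0.0, 0.9],
}

def cosine(a, b):
    """Cosine similarity: 1.0 means the two vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def analogy(a, b, c):
    """Return the word closest to vec(a) - vec(b) + vec(c), e.g. Tokyo - Japan + France."""
    target = [x - y + z for x, y, z in zip(VECTORS[a], VECTORS[b], VECTORS[c])]
    # Exclude the three input words so the answer is a new word.
    candidates = [w for w in VECTORS if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(VECTORS[w], target))

print(analogy("Tokyo", "Japan", "France"))    # Paris
print(analogy("Washington", "USA", "Japan"))  # Tokyo
```

The same “capital of” difference works in both directions because it is shared by every capital and its country in this toy table.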

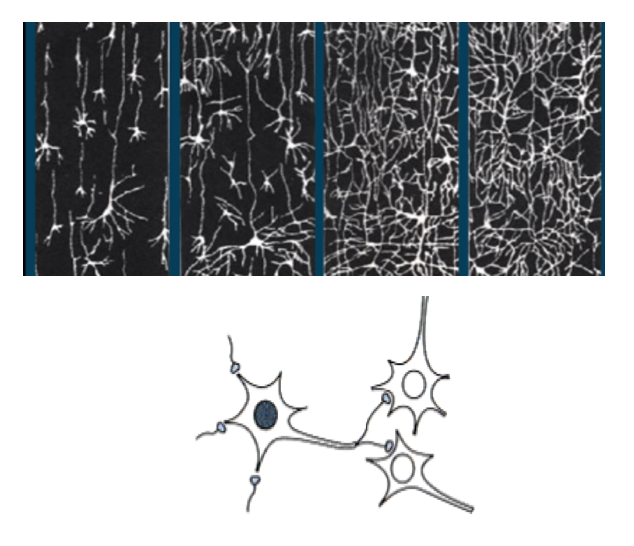

Each word can be described by a number of elements (features), and words are related to one another to varying degrees through these elements. Representing words by such elements is called a “distributed representation.” If a word is described by 3 elements, it is represented as a 3-dimensional vector. By linking words through their elements, a distributed representation creates a network among different words, from which sentences are ultimately built. In other words, the sentences we speak are made up of words that form networks. Large language models are able to navigate this network even when each word has a very large number of elements. In our heads, the nerve cells of the brain form a similar network, and when we think of a particular sentence, a particular pattern of connections in this network is formed. Even if similar words are used in different sentences, the state of the connections (the network) will be slightly different.

Fig. 1. Words and their elements.
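To picture a distributed representation and the network it creates, here is a minimal sketch in Python. It assumes each word is described by three hypothetical elements (animal, vehicle, size) with hand-picked values, and it links two words whenever their vectors point in similar directions, using an assumed cut-off of 0.9 for cosine similarity. This only illustrates the concept; it is not how an LLM actually stores its network.

```python
import math
from itertools import combinations

# Hypothetical 3-element (3-dimensional) vectors: [animal, vehicle, size].
# The values are hand-picked for illustration, not learned from data.
WORDS = {
    "cat":     [1.0, 0.0, 0.2],
    "dog":     [1.0, 0.0, 0.3],
    "tiger":   [1.0, 0.0, 0.6],
    "car":     [0.0, 1.0, 0.7],
    "bicycle": [0.0, 1.0, 0.3],
}

def cosine(a, b):
    """Similarity of direction: 1.0 = same direction, 0.0 = no elements in common."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def build_word_network(words, threshold=0.9):
    """Link every pair of words whose similarity is at least `threshold` (an assumed cut-off)."""
    edges = []
    for w1, w2 in combinations(words, 2):
        sim = cosine(words[w1], words[w2])
        if sim >= threshold:
            edges.append((w1, w2, round(sim, 3)))
    return edges

for w1, w2, sim in build_word_network(WORDS):
    print(f"{w1} -- {w2}  similarity {sim}")
```

With these values the three animals end up linked to one another and the two vehicles to each other, while no link crosses between the groups.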

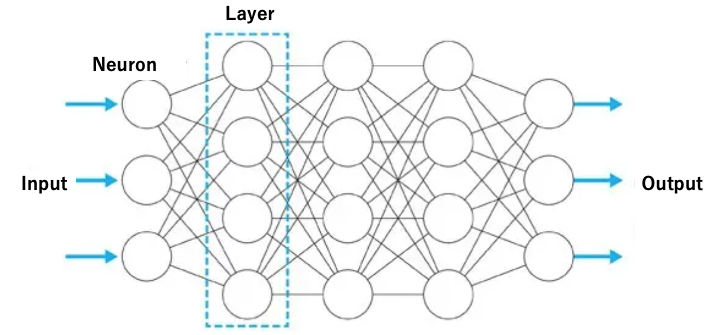

LLMs are trained on vast amounts of human-written text, including conversations, and note the patterns of connections between words in it. Such models “learn” which connection paths form easily and which form only with difficulty. By learning in advance how probable each connection is, and ranking paths from easy to difficult, LLMs are able to speak more smoothly. In the human brain, the neural network is organized in multiple layers, which makes it possible to create increasingly complex sentences. Similarly, by building an LLM whose network of words spans multiple layers, it becomes possible to create increasingly sophisticated sentences. This process, in which a multi-layered network learns and improves its connections, is called “deep learning.” Although this is a vastly simplified explanation, it outlines the basic operation of LLMs.
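The idea of ranking connections by probability can be sketched with a bigram model. This is a much simpler technique than an LLM: it only counts which word directly follows which, while an LLM uses a deep neural network that takes far longer context into account. The tiny corpus below is made up for illustration. The model turns counts into probabilities and then “speaks” by always choosing the most likely next word.

```python
from collections import Counter, defaultdict

# A tiny, made-up corpus standing in for the huge body of text an LLM learns from.
CORPUS = [
    "the island has many elderly residents",
    "the island has beautiful beaches",
    "the island is quiet",
    "the doctor is far away",
]

def train_bigrams(sentences):
    """Count how often each word is immediately followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def next_word_probabilities(counts, word):
    """Convert counts into probabilities, ranked from most to least likely."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    # Counter.most_common keeps first-seen order for ties.
    return [(w, c / total) for w, c in followers.most_common()]

def generate(counts, start, max_words=10):
    """Build a sentence by repeatedly taking the most probable next word."""
    words = [start]
    while len(words) < max_words:
        ranked = next_word_probabilities(counts, words[-1])
        if not ranked:  # no known continuation: stop
            break
        words.append(ranked[0][0])
    return " ".join(words)

counts = train_bigrams(CORPUS)
print(next_word_probabilities(counts, "the"))  # [('island', 0.75), ('doctor', 0.25)]
print(generate(counts, "the"))                 # the island has many elderly residents
```

Because “island” follows “the” in three of the four sentences, it is ranked as the “easy” connection, which is the same intuition the paragraph above describes at a far larger scale.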

Fig. 2. Neural network in our brain and the connections of each neuron.

Fig. 3. Network of neurons in the deep layers of our brain.
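The role of multiple layers can be shown with a minimal forward pass through a small artificial network, written in plain Python. Each layer multiplies its input by a table of weights and adds a bias, and the hidden layers then apply a ReLU function that keeps only positive signals; the output of one layer becomes the input of the next. The layer sizes and weights are hypothetical numbers chosen so the arithmetic is easy to follow. In deep learning these weights are not written by hand but adjusted automatically during training, and real LLMs have billions of them.

```python
# A minimal multi-layer (deep) network forward pass in plain Python.
# All weights and biases are hypothetical, hand-picked values for illustration.

def dense(inputs, weights, biases):
    """One layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """Keep positive signals and silence negative ones."""
    return [max(0.0, v) for v in values]

def forward(x, layers):
    """Pass x through each layer in turn: ReLU on hidden layers, none on the last."""
    for i, (weights, biases) in enumerate(layers):
        x = dense(x, weights, biases)
        if i < len(layers) - 1:
            x = relu(x)
    return x

LAYERS = [
    ([[1.0, -1.0, 2.0], [0.5, 1.0, -1.0]], [0.0, 0.0]),  # layer 1: 3 inputs -> 2 units
    ([[1.0, 1.0], [-1.0, 0.5]], [0.5, 0.0]),            # layer 2: 2 units -> 2 units
    ([[2.0, 3.0]], [-1.0]),                             # output layer: 2 units -> 1
]

print(forward([1.0, 0.0, 0.5], LAYERS))  # [4.0]
```

Stacking more layers lets later layers combine the patterns detected by earlier ones, which is the sense in which deeper networks can represent more complex structure.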

Deep learning is expected to make computers (programs) smarter and smarter, and many predict the rise of systems free of the flaws that mar human intelligence. Just as human intelligence grows with new experiences and learning, LLMs also get smarter as they learn, hinting at the emergence of systems whose behavior humans can no longer predict.

This superior intelligence created by computers is what we now call artificial intelligence, and it may prove of great use to humanity. Many fear that computer-based AI will take over human jobs. However, as mentioned at the beginning, in a society with a declining birthrate and an aging population, AI will likely become an indispensable means of sustaining society. Yesterday, it was reported that nursing care machines combining AI with other medical devices are already being made in China. At Osaka University’s School of Pharmaceutical Sciences, research is reportedly underway on using AI to create new medicines. Generative AI for agriculture is also being developed to help determine the best time to sow seeds and to grow and manage crops.